Enhance Your GoPro Videos with Telemetry Data Overlays Using WebCodecs API

Published on July 2, 2024

Last updated on September 17, 2024

16 min read

GoPro cameras are renowned for capturing dynamic visuals, but they also record valuable telemetry such as speed and GPS coordinates. By extracting this data and overlaying it onto your video, you add a layer of information that enriches the footage for analysis or storytelling. In this tutorial, we use the gpmf-extract and gopro-telemetry libraries to read the telemetry, draw it onto each frame, and encode the result into a new MP4 file with the WebCodecs API.

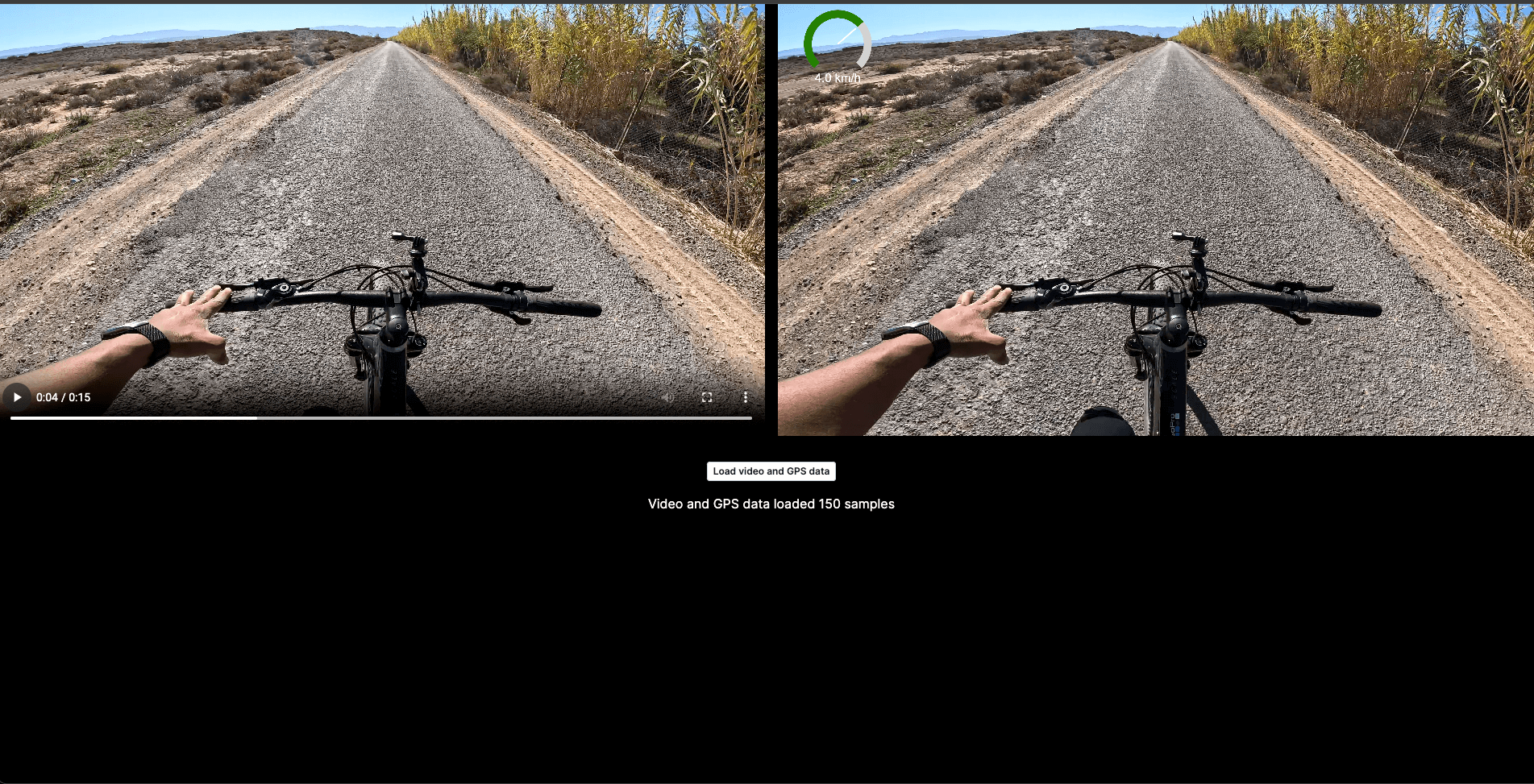

That means you can take a video that looks like this:

And turn it into this.

Let us embark on our journey.

Initial setup

Ensure you have the following prerequisites ready for a smooth start:

- A Next.js project (v14 or later recommended) with TypeScript and Tailwind CSS

- gopro-telemetry and gpmf-extract packages for data extraction
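The export step later in this tutorial depends on the WebCodecs VideoEncoder, which is not available in every browser and never exists during server rendering. The sketch below is one way to check support before recording. The helper name canEncodeH264 and the config values are illustrative assumptions; the only WebCodecs call it relies on is the static VideoEncoder.isConfigSupported method.

```typescript
// Subset of VideoEncoderConfig used in this tutorial (illustrative assumption)
interface H264EncoderConfig {
  codec: string;
  width: number;
  height: number;
  bitrate: number;
  framerate: number;
}

// Hypothetical helper: resolves true only if this environment can encode the config
async function canEncodeH264(config: H264EncoderConfig): Promise<boolean> {
  // Look the constructor up dynamically so this also runs where WebCodecs is missing
  const encoderCtor = (globalThis as any).VideoEncoder;
  if (typeof encoderCtor === "undefined") {
    return false; // e.g. during server rendering or in a browser without WebCodecs
  }
  try {
    const support = await encoderCtor.isConfigSupported(config);
    return support.supported === true;
  } catch {
    return false; // isConfigSupported rejects configs it considers invalid
  }
}

// Example usage in the browser:
// canEncodeH264({ codec: "avc1.640028", width: 1920, height: 1080, bitrate: 20_000_000, framerate: 30 })
//   .then((ok) => console.log(ok ? "H.264 encoding available" : "Not supported"));
```

Checking the exact configuration, rather than only testing whether VideoEncoder exists, also catches browsers that ship WebCodecs but cannot encode the requested codec or resolution.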

Begin by creating a new Next.js project:

```shell
npx create-next-app@latest next-video-gopro
cd next-video-gopro
```

Extracting Telemetry Data

Install the packages that handle telemetry extraction:

```shell
npm install gpmf-extract gopro-telemetry
```

We'll use Next.js server-side capabilities to process the telemetry data, so no external server is needed.

Preparing the Video and Data Integration

Next, create a simple interface with a video element and a button that loads the GoPro video and its telemetry data.


We'll call gpmf-extract and gopro-telemetry from a Next.js server action, which runs on the server and can read the video from disk with fs, so no separate backend is needed. Begin by creating a components folder (this path assumes you enabled the src/ directory option in create-next-app; otherwise use app/components):

```shell
mkdir src/app/components
```

- Create a home.tsx file in the components folder:

```shell
touch src/app/components/home.tsx
```

- Paste this code into home.tsx:

```tsx
"use client";

import { useRef } from "react";

export default function Home() {
  const videoRef = useRef<HTMLVideoElement | null>(null);

  const loadVideoGPSData = async () => {
    const video = videoRef.current;
    if (video) {
      // GoPro.mp4 lives in the public/ folder, so it is served from the site root
      video.src = "GoPro.mp4";
    }
  };

  return (
    <div>
      <div className="grid grid-cols-2 gap-4">
        <video
          style={{
            height: "auto",
            objectFit: "contain",
          }}
          ref={videoRef}
          controls
        ></video>
      </div>
      <div className="flex flex-col align-middle items-center gap-4 mt-8">
        <button
          onClick={loadVideoGPSData}
          type="button"
          className="rounded bg-white px-2 py-1 text-xs font-semibold text-gray-900 shadow-sm ring-1 ring-inset ring-gray-300 hover:bg-gray-50"
        >
          Load video and GPS data
        </button>
      </div>
    </div>
  );
}
```

- Update your page.tsx to extract the telemetry data on the server:

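```tsx
import Home from "./components/home";
import gpmfExtract from "gpmf-extract";
import goproTelemetry from "gopro-telemetry";
import fs from "fs";
import path from "path";

export default function Page() {
  // Server action: runs only on the server, so it can read files from disk
  async function getTelemetryData(videoFile: string) {
    "use server";

    return new Promise((resolve, reject) => {
      // Read the video from the public folder (relative to the project root).
      // A synchronous throw here rejects the promise automatically.
      const file = fs.readFileSync(path.join(process.cwd(), "public", videoFile));

      gpmfExtract(file)
        .then((extracted) => {
          // Parse the raw GPMF track into JSON, grouped by device and stream
          goproTelemetry(extracted, {}, (telemetry) => {
            resolve(telemetry);
          });
        })
        .catch(reject);
    });
  }

  return <Home getTelemetryData={getTelemetryData} />;
}
```

In the code above, we use a Next.js server action to extract the GoPro telemetry from a video file in the public folder and return it as a promise. We then pass the function to the Home component as a prop. If TypeScript reports missing type declarations for gpmf-extract or gopro-telemetry, declaring the modules in a .d.ts file (for example, declare module "gpmf-extract";) is a quick workaround.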
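The object returned by getTelemetryData is keyed by device ID and then by stream; this tutorial reads device 1's GPS5 stream, whose sample values are [latitude, longitude, altitude, 2D speed, 3D speed] with speeds in m/s. As a sketch, assuming that shape (the interfaces below are a simplified, hypothetical subset of the gopro-telemetry output) and assuming each sample's cts is in milliseconds from the start of the video, the stream can be turned into a time-indexed km/h series. Indexing by cts is a swapped-in alternative to the sample-date approach the tutorial uses for its speed lookup.

```typescript
// Simplified, hypothetical subset of the gopro-telemetry output
interface TelemetrySample {
  value: number[]; // GPS5: [lat (deg), lon (deg), alt (m), 2D speed (m/s), 3D speed (m/s)]
  cts: number; // assumed: milliseconds from the start of the video
  date?: string;
}

interface TelemetryStream {
  name: string;
  samples: TelemetrySample[];
}

type Telemetry = Record<string, { streams: Record<string, TelemetryStream | undefined> } | undefined>;

interface SpeedPoint {
  seconds: number; // position in the video
  kmh: number; // 2D ground speed
}

const MS_TO_KMH = 3.6; // 1 m/s = 3.6 km/h

// Hypothetical helper: extract a speed-over-time series from the GPS5 stream
function gpsSpeedSeries(telemetry: Telemetry, deviceId = "1"): SpeedPoint[] {
  const gps = telemetry[deviceId]?.streams.GPS5;
  if (!gps) return []; // no GPS stream, e.g. GPS was off or had no lock
  return gps.samples.map((sample) => ({
    seconds: sample.cts / 1000,
    kmh: sample.value[3] * MS_TO_KMH,
  }));
}
```

Converting units once, where the data enters the app, keeps the drawing code free of magic numbers and makes the km/h label on the speedometer trustworthy.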

Next, we call getTelemetryData from the home page component (home.tsx). The component receives the function as a prop and calls it when you click the button that loads the video and GPS data. Once the promise resolves, we store the GPS stream in the gpsData state so we can use it later.

```tsx
"use client";

import { useRef, useState } from "react";

type GPSData = {
  samples: PurpleSample[];
  name: string;
  units: string;
};

interface PurpleSample {
  value: number[]; // GPS5: [latitude, longitude, altitude, 2D speed (m/s), 3D speed (m/s)]
  cts: number;
  date: string;
  sticky?: { [key: string]: number };
}

export default function Home({
  getTelemetryData,
}: {
  getTelemetryData: (videoFile: string) => Promise<any>;
}) {
  const videoRef = useRef<HTMLVideoElement | null>(null);
  const [gpsData, setGpsData] = useState<GPSData | null>(null);
  const messageRef = useRef<HTMLParagraphElement | null>(null);

  const loadVideoGPSData = async () => {
    const video = videoRef.current;
    const videoURL = "GoPro.mp4";
    if (!video) return;

    video.src = videoURL;
    const telemetryData = await getTelemetryData(videoURL);

    // Telemetry is keyed by device ID ("1" is the camera) and then by stream
    const gps: GPSData | undefined = telemetryData?.[1]?.streams?.GPS5;
    if (gps) {
      setGpsData(gps);
      // Update the message outside the state setter, which should stay free of side effects
      if (messageRef.current) {
        messageRef.current.innerText = `Video and GPS data loaded: ${gps.samples.length} samples`;
      }
    }
  };

  return (
    <div>
      <div className="grid grid-cols-2 gap-4">
        <video
          style={{
            height: "auto",
            objectFit: "contain",
          }}
          ref={videoRef}
          controls
        ></video>
      </div>
      <div className="flex flex-col align-middle items-center gap-4 mt-8">
        <button
          onClick={loadVideoGPSData}
          type="button"
          className="rounded bg-white px-2 py-1 text-xs font-semibold text-gray-900 shadow-sm ring-1 ring-inset ring-gray-300 hover:bg-gray-50"
        >
          Load video and GPS data
        </button>
        <p ref={messageRef}></p>
      </div>
    </div>
  );
}
```

Next, we redraw each video frame onto a canvas so that we can later draw the speedometer on top of it.

We add play and pause event listeners to the video element. While the video is playing, we draw its current frame onto the canvas with the Canvas API's drawImage method, and requestAnimationFrame schedules the next redraw.

```tsx
"use client";

import { useEffect, useRef, useState } from "react";

type GPSData = {
  samples: PurpleSample[];
  name: string;
  units: string;
};

interface PurpleSample {
  value: number[]; // GPS5: [latitude, longitude, altitude, 2D speed (m/s), 3D speed (m/s)]
  cts: number;
  date: string;
  sticky?: { [key: string]: number };
}

const MAX_SPEED_KMH = 180; // speed shown at the end of the speedometer arc

export default function Home({
  getTelemetryData,
}: {
  getTelemetryData: (videoFile: string) => Promise<any>;
}) {
  const videoRef = useRef<HTMLVideoElement | null>(null);
  const [gpsData, setGpsData] = useState<GPSData | null>(null);
  const messageRef = useRef<HTMLParagraphElement | null>(null);
  const canvasRef = useRef<HTMLCanvasElement | null>(null);

  const loadVideoGPSData = async () => {
    const video = videoRef.current;
    const videoURL = "GoPro.mp4";
    if (!video) return;

    video.src = videoURL;
    const telemetryData = await getTelemetryData(videoURL);
    const gps: GPSData | undefined = telemetryData?.[1]?.streams?.GPS5;
    if (gps) {
      setGpsData(gps);
      if (messageRef.current) {
        messageRef.current.innerText = `Video and GPS data loaded: ${gps.samples.length} samples`;
      }
    }
  };

  let animationFrameId: number | null = null;

  // Draw the current video frame, then the speedometer if a speed (km/h) is given
  const renderVideoOnCanvas = (speed?: number): void => {
    const canvas = canvasRef.current;
    const video = videoRef.current;
    const ctx = canvas?.getContext("2d");
    if (!canvas || !video || !ctx) return;

    canvas.width = video.videoWidth;
    canvas.height = video.videoHeight;
    ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
    if (speed === undefined) return;

    const centerX = 300; // gauge center, near the top-left corner
    const centerY = 200;
    const radius = 150;
    const arrowLength = radius * 0.8;
    const startAngle = 0.75 * Math.PI; // the arc sweeps 270 degrees clockwise
    const sweep = 1.5 * Math.PI;
    const ratio = Math.min(Math.max(speed / MAX_SPEED_KMH, 0), 1);
    const progress = startAngle + ratio * sweep;

    // Speedometer background
    ctx.beginPath();
    ctx.arc(centerX, centerY, radius, startAngle, startAngle + sweep);
    ctx.lineWidth = 40;
    ctx.strokeStyle = "lightgrey";
    ctx.stroke();

    // Speedometer progress
    ctx.beginPath();
    ctx.arc(centerX, centerY, radius, startAngle, progress);
    ctx.strokeStyle = "green";
    ctx.stroke();

    // Needle
    ctx.beginPath();
    ctx.moveTo(centerX, centerY);
    ctx.lineTo(centerX + arrowLength * Math.cos(progress), centerY + arrowLength * Math.sin(progress));
    ctx.lineWidth = 8;
    ctx.strokeStyle = "white";
    ctx.stroke();

    // Speed label
    ctx.font = "60px Arial";
    ctx.fillStyle = "white";
    ctx.textAlign = "center";
    ctx.fillText(`${speed.toFixed(1)} km/h`, centerX, centerY + radius + 40);
  };

  // Return the speed (km/h) of the GPS sample nearest to the video's current time
  const getSpeedForNearestDate = (currentTime: number): number | undefined => {
    const samples = gpsData?.samples;
    if (!samples?.length) return undefined;

    const startDate = new Date(samples[0].date).getTime();
    let closest = samples[0];
    let smallestGap = Infinity;
    for (const sample of samples) {
      const offset = (new Date(sample.date).getTime() - startDate) / 1000;
      const gap = Math.abs(offset - currentTime);
      if (gap < smallestGap) {
        smallestGap = gap;
        closest = sample;
      }
    }
    // GPS5 value[3] is the 2D speed in m/s; multiply by 3.6 for km/h
    return closest.value[3] * 3.6;
  };

  const updateCanvas = () => {
    const video = videoRef.current;
    if (!video) return;
    if (!gpsData && messageRef.current) {
      messageRef.current.innerText = "No GPS data loaded";
    }
    renderVideoOnCanvas(getSpeedForNearestDate(video.currentTime));
    animationFrameId = requestAnimationFrame(updateCanvas);
  };

  useEffect(() => {
    const video = videoRef.current;
    if (!video) return;

    const handleVideoPlay = () => {
      if (animationFrameId === null) {
        animationFrameId = requestAnimationFrame(updateCanvas);
      }
    };
    const handleVideoPause = () => {
      if (animationFrameId !== null) {
        cancelAnimationFrame(animationFrameId);
        animationFrameId = null;
      }
    };

    video.addEventListener("play", handleVideoPlay);
    video.addEventListener("pause", handleVideoPause);
    // Restart the loop with the latest gpsData if the video is already playing
    if (!video.paused) handleVideoPlay();

    return () => {
      video.removeEventListener("play", handleVideoPlay);
      video.removeEventListener("pause", handleVideoPause);
      handleVideoPause(); // stop the loop that captured the previous gpsData
    };
  }, [gpsData]);

  return (
    <div>
      <div className="grid grid-cols-2 gap-4">
        <video
          style={{
            height: "auto",
            objectFit: "contain",
          }}
          ref={videoRef}
          controls
        ></video>
        <canvas
          style={{
            height: "auto",
            objectFit: "contain",
            width: "100%",
          }}
          ref={canvasRef}
        />
      </div>
      <div className="flex flex-col align-middle items-center gap-4 mt-8">
        <button
          onClick={loadVideoGPSData}
          type="button"
          className="rounded bg-white px-2 py-1 text-xs font-semibold text-gray-900 shadow-sm ring-1 ring-inset ring-gray-300 hover:bg-gray-50"
        >
          Load video and GPS data
        </button>
        <p ref={messageRef}></p>
      </div>
    </div>
  );
}
```

Congratulations, you've drawn your first video overlay using telemetry data. Here's a screenshot of what you'll get at this stage when playing the video.
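getSpeedForNearestDate walks through every GPS sample on each animation frame, which is fine for short clips. For long recordings, one alternative (a sketch, not the tutorial's method) is to compute each sample's offset in seconds from the first sample's date once, then binary-search those offsets. The names nearestIndex and makeSpeedLookup are hypothetical, and the sketch assumes samples are in chronological order and that value[3] is the 2D speed in m/s.

```typescript
interface GpsSample {
  value: number[]; // value[3] is assumed to be the 2D speed in m/s (GPS5 layout)
  date: string;
}

// Index of the offset closest to t; offsets must be sorted ascending
function nearestIndex(offsets: number[], t: number): number {
  if (offsets.length === 0) return -1;
  let lo = 0;
  let hi = offsets.length - 1;
  // Binary search for the first offset >= t (or the last index if every offset is < t)
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (offsets[mid] < t) lo = mid + 1;
    else hi = mid;
  }
  // The nearest sample is either that one or its predecessor
  if (lo > 0 && Math.abs(t - offsets[lo - 1]) <= Math.abs(offsets[lo] - t)) return lo - 1;
  return lo;
}

// Hypothetical helper: precompute offsets once, then look up speeds in O(log n)
function makeSpeedLookup(samples: GpsSample[]): (currentTime: number) => number | undefined {
  if (samples.length === 0) return () => undefined;
  const start = new Date(samples[0].date).getTime();
  // Seconds since the first GPS sample, mirroring getSpeedForNearestDate
  const offsets = samples.map((s) => (new Date(s.date).getTime() - start) / 1000);
  return (currentTime) => samples[nearestIndex(offsets, currentTime)].value[3] * 3.6; // m/s to km/h
}
```

Inside the component, you would build the lookup once whenever the GPS data changes (for example with useMemo) and call it from the animation loop, instead of rescanning the samples on every frame.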

Convert Canvas into an MP4 Video

- Install the mp4-muxer package:

```shell
npm install mp4-muxer
```

- Import mp4-muxer into home.tsx:

```tsx
import { Muxer, ArrayBufferTarget } from "mp4-muxer";
```

- Set up the mp4-muxer Muxer and a WebCodecs VideoEncoder for later use. Because VideoEncoder only exists in the browser (not during server rendering) and the component re-renders, we keep both in refs and create them only when recording starts:

```tsx
// Top of home.tsx (module scope): the encoder and muxer expect frames of exactly this size
const OUTPUT_WIDTH = 1920;
const OUTPUT_HEIGHT = 1080;
const VIDEO_FRAMERATE = 30;
const KEY_FRAME_INTERVAL = 10; // seconds between forced key frames

// Inside the Home component: refs survive re-renders
const muxerRef = useRef<Muxer<ArrayBufferTarget> | null>(null);
const encoderRef = useRef<VideoEncoder | null>(null);
const isRecordingRef = useRef(false);
const lastEncodedTimeRef = useRef(-1); // video time (s) of the last encoded frame
const lastKeyFrameRef = useRef(0); // video time (s) of the last key frame

const setupRecorder = () => {
  const muxer = new Muxer({
    target: new ArrayBufferTarget(), // keep the whole MP4 file in memory
    video: { codec: "avc", width: OUTPUT_WIDTH, height: OUTPUT_HEIGHT },
    // Puts metadata at the start of the file. Since we're using ArrayBufferTarget anyway,
    // this makes no difference to memory footprint.
    fastStart: "in-memory",
    firstTimestampBehavior: "offset", // shift the first frame's timestamp to 0
  });

  const encoder = new VideoEncoder({
    output: (chunk, meta) => muxer.addVideoChunk(chunk, meta),
    error: (e) => console.error(e),
  });

  encoder.configure({
    codec: "avc1.640028", // H.264 High profile, Level 4.0 (suitable for 1080p30)
    width: OUTPUT_WIDTH,
    height: OUTPUT_HEIGHT,
    bitrate: 20_000_000, // stays within the Level 4.0 limit; adjust as needed
    avc: { format: "avc" },
    framerate: VIDEO_FRAMERATE,
    bitrateMode: "constant",
  });

  muxerRef.current = muxer;
  encoderRef.current = encoder;
};
```

- In renderVideoOnCanvas, size the canvas to the output resolution instead of the video's resolution, so every frame matches the encoder configuration:

```tsx
canvas.width = OUTPUT_WIDTH;
canvas.height = OUTPUT_HEIGHT;
ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
```

- Update the updateCanvas function so that, while recording, it turns the canvas into a VideoFrame with a timestamp, encodes it, and closes the frame afterwards:

```tsx
const updateCanvas = () => {
  const video = videoRef.current;
  const canvas = canvasRef.current;
  if (!video || !canvas) return;

  const currentTime = video.currentTime;
  renderVideoOnCanvas(getSpeedForNearestDate(currentTime));

  // While recording, encode at most one frame per 1 / VIDEO_FRAMERATE seconds of video time
  const encoder = encoderRef.current;
  if (
    isRecordingRef.current &&
    encoder &&
    currentTime - lastEncodedTimeRef.current >= 1 / VIDEO_FRAMERATE
  ) {
    // VideoFrame timestamps are in microseconds; use the video's own clock
    const frame = new VideoFrame(canvas, {
      timestamp: Math.round(currentTime * 1e6),
    });
    // Force a key frame first, then at least every KEY_FRAME_INTERVAL seconds for good scrubbing
    const needsKeyFrame =
      lastEncodedTimeRef.current < 0 ||
      currentTime - lastKeyFrameRef.current >= KEY_FRAME_INTERVAL;
    if (needsKeyFrame) lastKeyFrameRef.current = currentTime;
    encoder.encode(frame, { keyFrame: needsKeyFrame });
    frame.close(); // release our reference once the frame is queued
    lastEncodedTimeRef.current = currentTime;
  }

  animationFrameId = requestAnimationFrame(updateCanvas);
};
```

- Add a button to the returned JSX to create a video from the canvas:

```tsx
<button
  type="button"
  onClick={createVideoFromCanvasFrames}
  className="rounded bg-indigo-600 px-2 py-1 text-sm font-semibold text-white shadow-sm hover:bg-indigo-500 focus-visible:outline focus-visible:outline-2 focus-visible:outline-offset-2 focus-visible:outline-indigo-600"
>
  Create a video from the canvas
</button>
```

- Set up the click handler for the button above. It creates the recorder and replays the video from the start; when the video ends, it flushes the video encoder, finalizes the muxer, and downloads the buffer as an MP4 file:

```tsx
const createVideoFromCanvasFrames = async () => {
  const video = videoRef.current;
  const canvas = canvasRef.current;
  if (!video || !canvas || !video.src) return;

  setupRecorder();
  lastEncodedTimeRef.current = -1;
  lastKeyFrameRef.current = 0;
  isRecordingRef.current = true;

  video.addEventListener(
    "ended",
    async () => {
      isRecordingRef.current = false;
      const encoder = encoderRef.current;
      const muxer = muxerRef.current;
      if (!encoder || !muxer) return;

      await encoder.flush(); // wait until every queued frame has been encoded
      encoder.close();
      muxer.finalize();

      const { buffer } = muxer.target; // buffer contains the final MP4 file
      const blob = new Blob([buffer], { type: "video/mp4" });
      const url = URL.createObjectURL(blob);
      const link = document.createElement("a");
      link.href = url;
      link.download = "gopro-telemetry.mp4";
      link.click();
    },
    { once: true } // register a fresh listener for each recording
  );

  video.currentTime = 0;
  video.muted = true;
  await video.play(); // the play listener starts the render loop
};
```

Here's the final code for home.tsx:

```tsx
"use client";

import { useEffect, useRef, useState } from "react";
import { Muxer, ArrayBufferTarget } from "mp4-muxer";

type GPSSample = {
  value: number[]; // GPS5: [latitude, longitude, altitude, 2D speed (m/s), 3D speed (m/s)]
  cts: number;
  date: string;
};

type GPSData = {
  samples: GPSSample[];
  name: string;
  units: string;
};

// The canvas is drawn at the output size so every frame matches the encoder settings
const OUTPUT_WIDTH = 1920;
const OUTPUT_HEIGHT = 1080;
const VIDEO_FRAMERATE = 30;
const KEY_FRAME_INTERVAL = 10; // seconds between forced key frames
const MAX_SPEED_KMH = 180; // speed shown at the end of the speedometer arc

export default function Home({
  getTelemetryData,
}: {
  getTelemetryData: (videoFile: string) => Promise<any>;
}) {
  const videoRef = useRef<HTMLVideoElement | null>(null);
  const canvasRef = useRef<HTMLCanvasElement | null>(null);
  const [message, setMessage] = useState("");

  // Refs survive re-renders, so the animation loop never reads stale values
  const gpsDataRef = useRef<GPSData | null>(null);
  const animationFrameIdRef = useRef<number | null>(null);
  const muxerRef = useRef<Muxer<ArrayBufferTarget> | null>(null);
  const encoderRef = useRef<VideoEncoder | null>(null);
  const isRecordingRef = useRef(false);
  const lastEncodedTimeRef = useRef(-1); // video time (s) of the last encoded frame
  const lastKeyFrameRef = useRef(0); // video time (s) of the last key frame

  // Create the muxer and encoder when recording starts; VideoEncoder only
  // exists in the browser, not during server rendering
  const setupRecorder = () => {
    const muxer = new Muxer({
      target: new ArrayBufferTarget(),
      video: { codec: "avc", width: OUTPUT_WIDTH, height: OUTPUT_HEIGHT },
      fastStart: "in-memory", // write metadata at the start of the file
      firstTimestampBehavior: "offset", // shift the first frame's timestamp to 0
    });
    const encoder = new VideoEncoder({
      output: (chunk, meta) => muxer.addVideoChunk(chunk, meta),
      error: (e) => console.error(e),
    });
    encoder.configure({
      codec: "avc1.640028", // H.264 High profile, Level 4.0 (suitable for 1080p30)
      width: OUTPUT_WIDTH,
      height: OUTPUT_HEIGHT,
      bitrate: 20_000_000, // stays within the Level 4.0 limit; adjust as needed
      avc: { format: "avc" },
      framerate: VIDEO_FRAMERATE,
      bitrateMode: "constant",
    });
    muxerRef.current = muxer;
    encoderRef.current = encoder;
  };

  // Return the speed (km/h) of the GPS sample nearest to the video's current time
  const getSpeedForNearestDate = (currentTime: number): number | undefined => {
    const samples = gpsDataRef.current?.samples;
    if (!samples?.length) return undefined;

    const startDate = new Date(samples[0].date).getTime();
    let closest = samples[0];
    let smallestGap = Infinity;
    for (const sample of samples) {
      const offset = (new Date(sample.date).getTime() - startDate) / 1000;
      const gap = Math.abs(offset - currentTime);
      if (gap < smallestGap) {
        smallestGap = gap;
        closest = sample;
      }
    }
    // GPS5 value[3] is the 2D speed in m/s; multiply by 3.6 for km/h
    return closest.value[3] * 3.6;
  };

  // Draw the current video frame, then the speedometer if a speed (km/h) is given
  const renderVideoOnCanvas = (speed?: number): void => {
    const canvas = canvasRef.current;
    const video = videoRef.current;
    const ctx = canvas?.getContext("2d");
    if (!canvas || !video || !ctx) return;

    if (canvas.width !== OUTPUT_WIDTH || canvas.height !== OUTPUT_HEIGHT) {
      canvas.width = OUTPUT_WIDTH;
      canvas.height = OUTPUT_HEIGHT;
    }
    // Scale the frame to the output size (footage that is not 16:9 will be stretched)
    ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
    if (speed === undefined) return;

    const centerX = 300; // gauge center, near the top-left corner
    const centerY = 200;
    const radius = 150;
    const arrowLength = radius * 0.8;
    const startAngle = 0.75 * Math.PI; // the arc sweeps 270 degrees clockwise
    const sweep = 1.5 * Math.PI;
    const ratio = Math.min(Math.max(speed / MAX_SPEED_KMH, 0), 1);
    const progress = startAngle + ratio * sweep;

    // Speedometer background
    ctx.beginPath();
    ctx.arc(centerX, centerY, radius, startAngle, startAngle + sweep);
    ctx.lineWidth = 40;
    ctx.strokeStyle = "lightgrey";
    ctx.stroke();

    // Speedometer progress
    ctx.beginPath();
    ctx.arc(centerX, centerY, radius, startAngle, progress);
    ctx.strokeStyle = "green";
    ctx.stroke();

    // Needle
    ctx.beginPath();
    ctx.moveTo(centerX, centerY);
    ctx.lineTo(centerX + arrowLength * Math.cos(progress), centerY + arrowLength * Math.sin(progress));
    ctx.lineWidth = 8;
    ctx.strokeStyle = "white";
    ctx.stroke();

    // Speed label
    ctx.font = "60px Arial";
    ctx.fillStyle = "white";
    ctx.textAlign = "center";
    ctx.fillText(`${speed.toFixed(1)} km/h`, centerX, centerY + radius + 40);
  };

  const loadVideoGPSData = async () => {
    const video = videoRef.current;
    if (!video) return;
    const videoURL = "GoPro.mp4"; // served from the public/ folder
    video.src = videoURL;

    const telemetryData = await getTelemetryData(videoURL);
    const gps: GPSData | undefined = telemetryData?.[1]?.streams?.GPS5;
    gpsDataRef.current = gps ?? null;
    setMessage(gps ? `Video and GPS data loaded: ${gps.samples.length} samples` : "No GPS data found");
  };

  const updateCanvas = () => {
    const video = videoRef.current;
    const canvas = canvasRef.current;
    if (!video || !canvas) return;

    const currentTime = video.currentTime;
    renderVideoOnCanvas(getSpeedForNearestDate(currentTime));

    // While recording, encode at most one frame per 1 / VIDEO_FRAMERATE seconds of video time
    const encoder = encoderRef.current;
    if (
      isRecordingRef.current &&
      encoder &&
      currentTime - lastEncodedTimeRef.current >= 1 / VIDEO_FRAMERATE
    ) {
      // VideoFrame timestamps are in microseconds; use the video's own clock
      const frame = new VideoFrame(canvas, { timestamp: Math.round(currentTime * 1e6) });
      // Force a key frame first, then at least every KEY_FRAME_INTERVAL seconds for good scrubbing
      const needsKeyFrame =
        lastEncodedTimeRef.current < 0 ||
        currentTime - lastKeyFrameRef.current >= KEY_FRAME_INTERVAL;
      if (needsKeyFrame) lastKeyFrameRef.current = currentTime;
      encoder.encode(frame, { keyFrame: needsKeyFrame });
      frame.close(); // release our reference once the frame is queued
      lastEncodedTimeRef.current = currentTime;
    }

    animationFrameIdRef.current = requestAnimationFrame(updateCanvas);
  };

  useEffect(() => {
    const video = videoRef.current;
    if (!video) return;

    const handleVideoPlay = () => {
      if (animationFrameIdRef.current === null) {
        animationFrameIdRef.current = requestAnimationFrame(updateCanvas);
      }
    };
    const handleVideoPause = () => {
      if (animationFrameIdRef.current !== null) {
        cancelAnimationFrame(animationFrameIdRef.current);
        animationFrameIdRef.current = null;
      }
    };

    video.addEventListener("play", handleVideoPlay);
    video.addEventListener("pause", handleVideoPause);
    return () => {
      video.removeEventListener("play", handleVideoPlay);
      video.removeEventListener("pause", handleVideoPause);
      handleVideoPause();
    };
  }, []);

  const createVideoFromCanvasFrames = async () => {
    const video = videoRef.current;
    const canvas = canvasRef.current;
    if (!video || !canvas || !video.src) return;

    setupRecorder();
    lastEncodedTimeRef.current = -1;
    lastKeyFrameRef.current = 0;
    isRecordingRef.current = true;
    setMessage("Recording...");

    video.addEventListener(
      "ended",
      async () => {
        isRecordingRef.current = false;
        const encoder = encoderRef.current;
        const muxer = muxerRef.current;
        if (!encoder || !muxer) return;

        await encoder.flush(); // wait until every queued frame has been encoded
        encoder.close();
        muxer.finalize();

        const { buffer } = muxer.target; // buffer contains the final MP4 file
        const blob = new Blob([buffer], { type: "video/mp4" });
        const url = URL.createObjectURL(blob);
        const link = document.createElement("a");
        link.href = url;
        link.download = "gopro-telemetry.mp4";
        link.click();
        setMessage("Video created");
      },
      { once: true } // register a fresh listener for each recording
    );

    video.currentTime = 0;
    video.muted = true;
    await video.play(); // the play listener starts the render loop
  };

  return (
    <div>
      <div className="grid grid-cols-2 gap-4">
        <video
          style={{
            height: "auto",
            objectFit: "contain",
          }}
          ref={videoRef}
          controls
        ></video>
        <canvas
          style={{
            height: "auto",
            objectFit: "contain",
            width: "100%",
          }}
          ref={canvasRef}
        />
      </div>
      <div className="flex flex-col align-middle items-center gap-4 mt-8">
        <button
          onClick={loadVideoGPSData}
          type="button"
          className="rounded bg-white px-2 py-1 text-xs font-semibold text-gray-900 shadow-sm ring-1 ring-inset ring-gray-300 hover:bg-gray-50"
        >
          Load video and GPS data
        </button>
        <button
          type="button"
          onClick={createVideoFromCanvasFrames}
          className="rounded bg-indigo-600 px-2 py-1 text-sm font-semibold text-white shadow-sm hover:bg-indigo-500 focus-visible:outline focus-visible:outline-2 focus-visible:outline-offset-2 focus-visible:outline-indigo-600"
        >
          Create a video from the canvas
        </button>
        <p>{message}</p>
      </div>
    </div>
  );
}
```

Et voila, you've now successfully integrated telemetry data overlays into your GoPro videos using the WebCodecs API.
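On every animation frame, the recording loop has to decide whether to encode the canvas and whether to request a key frame. Pulling that decision into a pure function makes it testable outside the browser. This is a sketch under stated assumptions: it samples by video time (at most one frame per 1/fps seconds), produces microsecond timestamps as WebCodecs expects, and forces a key frame on the first frame and then every 10 seconds. The names decideFrame and EncoderClock are hypothetical.

```typescript
// Mutable recording state in seconds of video time; lastEncodedTime < 0 means nothing encoded yet
interface EncoderClock {
  lastEncodedTime: number;
  lastKeyFrameTime: number;
}

interface FrameDecision {
  encode: boolean;
  keyFrame: boolean;
  timestampUs: number; // WebCodecs VideoFrame timestamps are in microseconds
}

// Hypothetical helper: decide whether to encode the canvas at this video time
function decideFrame(
  currentTime: number,
  clock: EncoderClock,
  fps = 30,
  keyFrameIntervalSec = 10
): FrameDecision {
  const timestampUs = Math.round(currentTime * 1e6);
  const isFirst = clock.lastEncodedTime < 0;

  // Skip animation frames that arrive sooner than one output frame interval
  if (!isFirst && currentTime - clock.lastEncodedTime < 1 / fps) {
    return { encode: false, keyFrame: false, timestampUs };
  }

  // Key frame on the first frame and then every keyFrameIntervalSec seconds
  const keyFrame = isFirst || currentTime - clock.lastKeyFrameTime >= keyFrameIntervalSec;
  clock.lastEncodedTime = currentTime;
  if (keyFrame) clock.lastKeyFrameTime = currentTime;
  return { encode: true, keyFrame, timestampUs };
}
```

Keeping every time value in seconds of video time, and converting to microseconds only when building the VideoFrame, avoids mixing units from different clocks such as Date.getTime() milliseconds and video.currentTime seconds.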

This guide has walked you through the process from initial setup to the final production of a video that not only captivates visually but also provides valuable insights through the overlaid data. Whether you're analyzing performance, telling a more compelling story, or simply adding a professional touch to your videos, the skills you've acquired here open up a new realm of possibilities.

Thank you for following along with this tutorial. We're excited to see the dynamic and informative videos you'll create with your newfound knowledge. If you have any questions, suggestions, or would like to share your projects, feel free to reach out. Happy filming!